Emergency Sign Language Translator

Project Goals:

- Reasonable accuracy.

- Robust:

  - Signer independent.

  - Background independent.

- Real-time performance.

- A simple platform without the use of expensive devices.

- The system should recognize sign language for a wide variety of people.

The Chosen Algorithm:

CNN:

Inspired by biological data from physiological experiments on the visual cortex, a convolutional neural network (CNN) is a deep learning method that uses only as many weights as are needed to scan a small area of an image at a time, and reuses those same weights across the whole image. This keeps the number of parameters in the network relatively low. Additionally, since the model requires less data, it is also able to train faster.
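To make the weight-sharing idea concrete, here is a minimal Python sketch (the project itself is written in MATLAB). It slides one small kernel over a toy image and compares the parameter count of a convolutional layer with that of a fully connected layer. The image, the kernel, and the layer sizes are all made up for illustration and are not the network used in this project.

```python
def conv2d_valid(image, kernel):
    """Slide one small kernel over the image ('valid' mode, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # The same kh*kw weights are reused at every position.
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def conv_param_count(kh, kw, in_channels, out_channels):
    """Kernel weights plus one bias per output channel."""
    return (kh * kw * in_channels + 1) * out_channels


def dense_param_count(n_inputs, n_outputs):
    """Fully connected layer: one weight per input-output pair, plus biases."""
    return (n_inputs + 1) * n_outputs


# Toy 4x4 binary image and 2x2 kernel (hypothetical values).
image = [
    [0, 0, 1, 1],
    [0, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
]
kernel = [
    [1, 0],
    [0, 1],
]
feature_map = conv2d_valid(image, kernel)

# Illustrative layer sizes only: eight 3x3 filters on a 1-channel image,
# versus a dense layer mapping a flattened 128x128 image to 8 outputs.
conv_params = conv_param_count(3, 3, 1, 8)
dense_params = dense_param_count(128 * 128, 8)
print(feature_map, conv_params, dense_params)
```

With these assumed sizes the convolutional layer needs 80 parameters, while the dense layer needs 131080, which is the sense in which scanning a small area with shared weights keeps the parameter count low.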

Ran Malach, Amit Mor

Advisor: Ms. Karin Bociek

Electrical Engineering

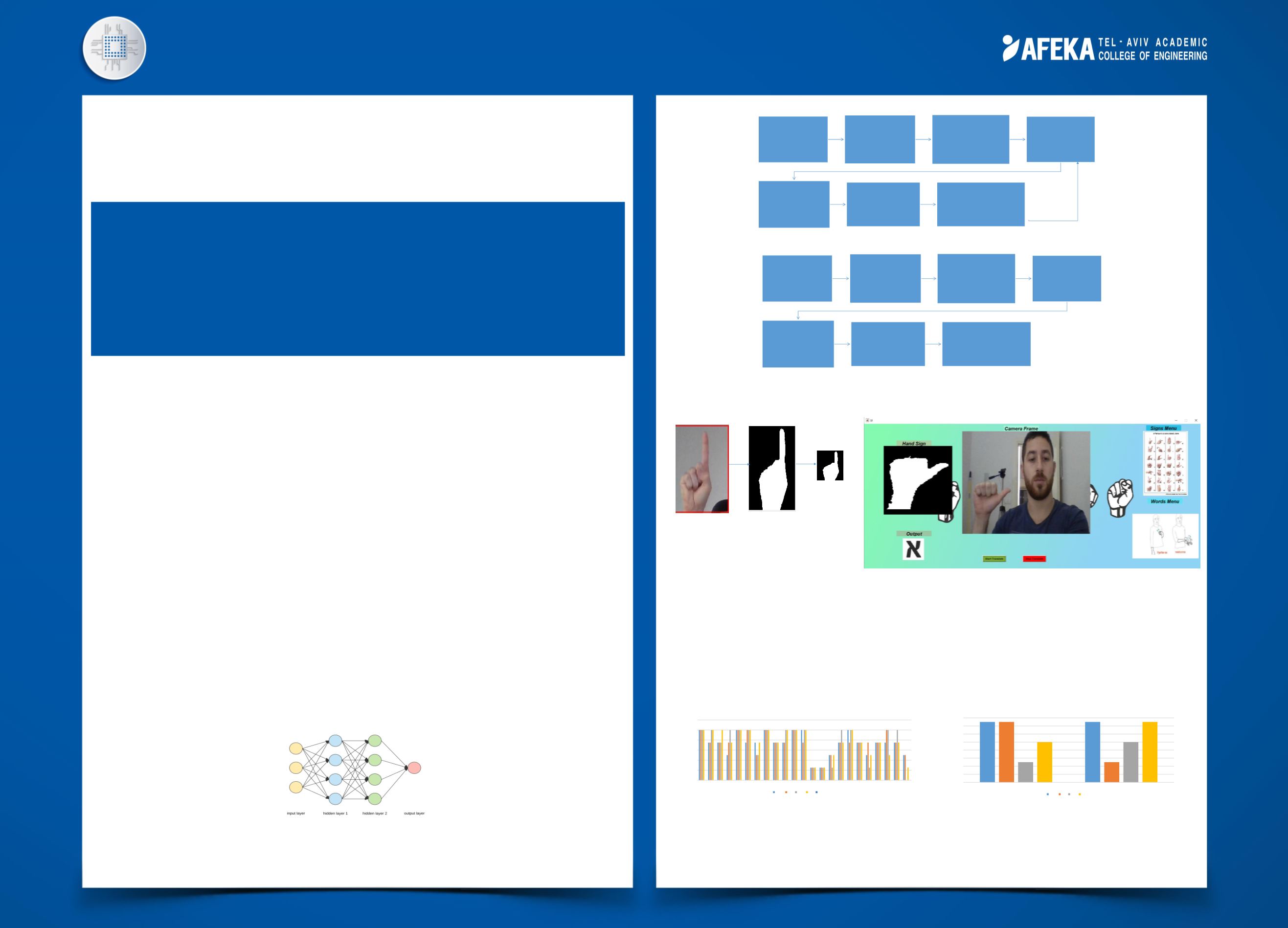

Poster panels: Translation Flow, Training Flow, Crop and Segment Hand, GUI and Sign Recognition.

System Outcomes:

We tested the system on 4 people with different skin tones. Each person tested the application by presenting each sign 4 times, and we recorded every time the system correctly identified the sign. The system correctly identified the sign in 73.25% of the presentations.
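The bookkeeping behind a figure like this can be sketched in a few lines of Python. The tally below is entirely hypothetical (two placeholder people, two placeholder signs, four presentations each) and does not reproduce the poster's measurements; the function and key names are assumptions made for illustration.

```python
def recognition_rate(results):
    """results maps (person, sign) -> list of booleans, one per presentation."""
    correct = sum(sum(trials) for trials in results.values())
    total = sum(len(trials) for trials in results.values())
    return correct / total


def rate_per_person(results):
    """Group the same tally by person."""
    grouped = {}
    for (person, _sign), trials in results.items():
        grouped.setdefault(person, []).extend(trials)
    return {person: sum(t) / len(t) for person, t in grouped.items()}


# Hypothetical tally: True means the sign was recognized correctly.
results = {
    ("person_1", "sign_a"): [True, True, False, True],
    ("person_1", "sign_b"): [True, False, False, True],
    ("person_2", "sign_a"): [True, True, True, True],
    ("person_2", "sign_b"): [False, True, True, False],
}
overall = recognition_rate(results)
by_person = rate_per_person(results)
print(overall, by_person)
```

Keeping the tally per person and per sign makes it possible to report both the overall rate and a breakdown for each tester.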

This project deals with the conversion of Israeli Sign Language to text output. The purpose of the project is to make sign language accessible and allow the hearing impaired to communicate with their surrounding environment. The working method is based on an image processing and deep learning algorithm written in MATLAB.

Translation Flow:

- Stand in front of the camera and open the MATLAB GUI.

- Press "start translate" on the GUI.

- Frames are sampled in a loop.

- Segment the palm or the hands and create a binary image of the sign.

- Feed the cropped binary image to the CNN model for prediction.

- Classify the prediction by label.

- The output is the letter or word that the app recognized.
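One pass of the loop above can be sketched as follows, in Python rather than the project's MATLAB. The RGB skin rule and its thresholds, the `toy_model` stand-in for the trained CNN, and the label names are all assumptions made for illustration; the project's actual segmentation and network are not described in enough detail here to reproduce.

```python
def is_skin(pixel):
    """Crude RGB skin test; the thresholds are illustrative assumptions."""
    r, g, b = pixel
    return (r > 95 and g > 40 and b > 20
            and r > g and r > b and (r - min(g, b)) > 15)


def segment(frame):
    """Turn an RGB frame (rows of (r, g, b) tuples) into a binary mask."""
    return [[1 if is_skin(p) else 0 for p in row] for row in frame]


def crop_to_foreground(mask):
    """Crop the mask to the bounding box of its 1-pixels (None if empty)."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return [row[cols[0]:cols[-1] + 1] for row in mask[rows[0]:rows[-1] + 1]]


def translate_frame(frame, model, labels):
    """Segment, crop, predict, then map the best score to its label."""
    cropped = crop_to_foreground(segment(frame))
    if cropped is None:
        return None  # no hand found in this frame
    scores = model(cropped)
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best]


def toy_model(binary_image):
    """Stand-in for the trained CNN: scores by fill ratio only."""
    area = len(binary_image) * len(binary_image[0])
    filled = sum(map(sum, binary_image)) / area
    return [filled, 1.0 - filled]


SKIN, WALL = (200, 140, 120), (30, 60, 90)  # made-up pixel colors
frame = [
    [WALL, WALL, WALL, WALL],
    [WALL, SKIN, SKIN, WALL],
    [WALL, SKIN, WALL, WALL],
    [WALL, WALL, WALL, WALL],
]
labels = ["sign_a", "sign_b"]  # placeholder label names
result = translate_frame(frame, toy_model, labels)
print(result)
```

In a live setting `translate_frame` would be called once per sampled frame; returning `None` when no skin pixels are found lets the loop skip frames without a hand.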

Training Flow:

- Capture the person making the sign against a uniform background.

- Run the function "Crop&Save sign name", extract the hand, and attach a title.

- After the signs are titled, the function saves the cropped image in a directory called "Letters".

- Run "write BW images to lib BW". This performs skin-color segmentation on the hand to turn it into a binary image, and resizes the image to 128x128 pixels.

- After all the binary images are in the BW directory, run the "augmentate" function, which applies rotate, scale, pan, and tilt to the binary images in order to make the data set more robust.

- Run "Training.m" to train the network.

- The training prints a chart that shows how good the prediction is. About 75% of the data set images are used for training and the rest for validation.
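Three of the data preparation steps above (resizing to 128x128, one augmentation, and the roughly 75/25 split) can be sketched in Python as follows. This is not the project's MATLAB code: nearest-neighbor resizing, the `pan` helper, the fixed shuffle seed, and the sample names are assumptions chosen to keep the example short, and only the pan augmentation is shown out of the four mentioned.

```python
import random


def resize_nearest(image, out_h=128, out_w=128):
    """Nearest-neighbor resize of a 2D list to out_h x out_w."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def pan(image, dx, dy):
    """Shift a binary image by (dx, dy) pixels, filling exposed area with 0."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            nr, nc = r + dy, c + dx
            if 0 <= nr < h and 0 <= nc < w:
                out[nr][nc] = image[r][c]
    return out


def split_dataset(samples, train_fraction=0.75, seed=0):
    """Shuffle a copy of the samples, then cut into training and validation."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


small = [[0, 1], [1, 1]]                 # toy binary image
resized = resize_nearest(small)          # 128 x 128
shifted = pan(small, dx=1, dy=0)         # one pixel to the right

# Hypothetical (file name, label) pairs standing in for the BW directory.
samples = [(f"img_{i}", "sign_a" if i % 2 else "sign_b") for i in range(20)]
train, val = split_dataset(samples)
print(len(resized), len(resized[0]), shifted, len(train), len(val))
```

Shuffling before the cut keeps each sign represented in both subsets on average; a stratified split per label would guarantee it, at the cost of a little more code.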

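Statistics - letters (chart): results per tester (Amit, Nir, Ran, Tal) for each Hebrew letter, א through ת, including 'ש. Vertical axis: 0 to 1.2.

Statistics - words (chart): results per tester (Amit, Nir, Ran, Tal) for the phrases "מה שלומך?" ("How are you?") and "עזרה" ("Help"). Vertical axis: 0 to 0.8.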