Improving Language Models and Speech Recognition using Character Aware Deep Learning

Oshri Mahlev – Software Engineering

Ohad Volk – Industrial Engineering

Advisor: Dr. Gadi Pinkas

Language modeling is a key component of almost every Natural Language Processing task. In particular, it can be integrated into the speech recognition process.

By using character awareness and subword information, we improved language modeling, and we propose a novel approach to improving the rescoring process in speech recognition.

I. Improving the LM using LSTM and combining Subword Information and Character Awareness techniques.

II. Improving the Speech Recognition rescoring process.

Test -

PPL

Validation -

PPL

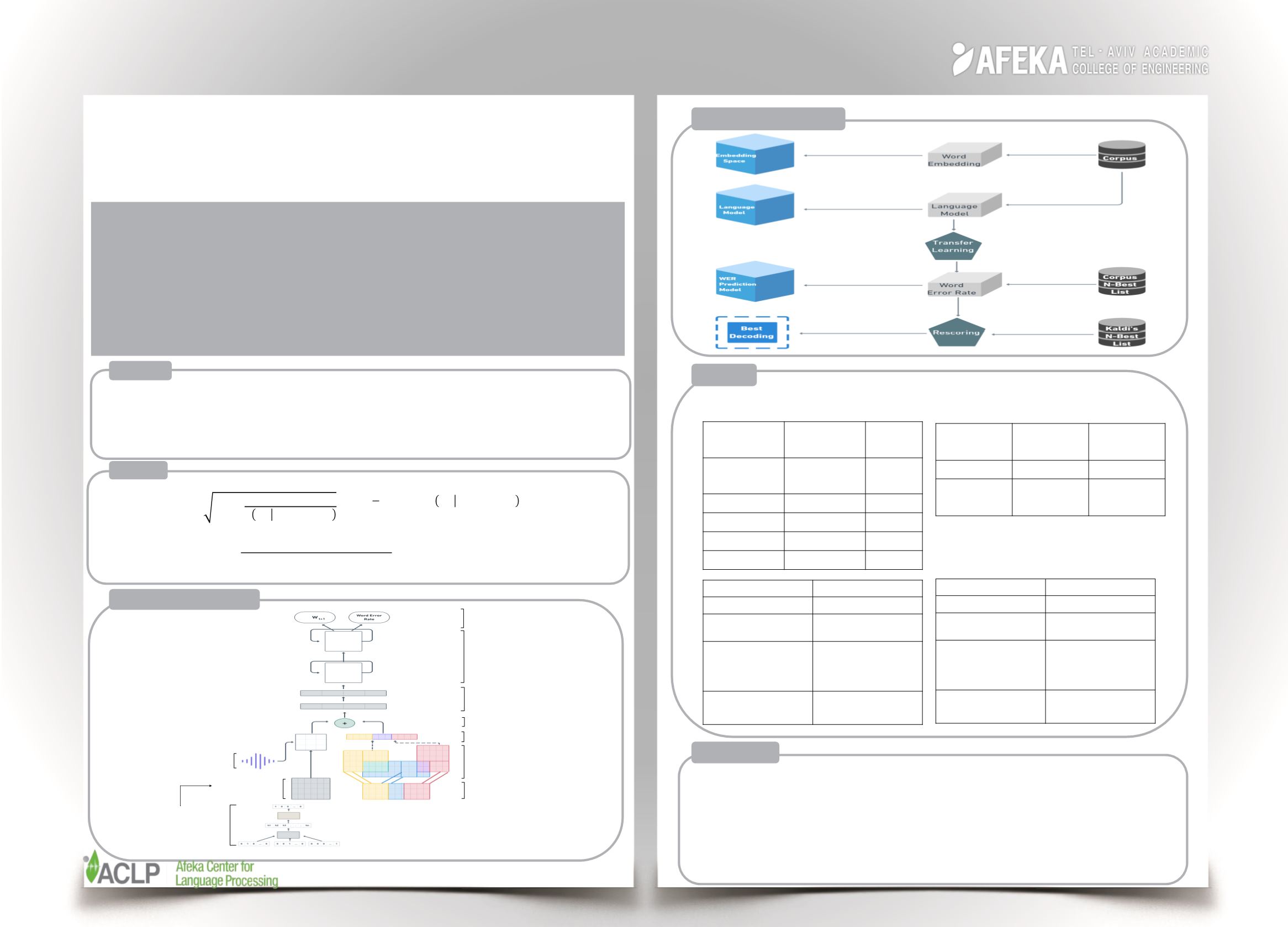

Model

76.5

81.5

4-gram LM

RNN

81.6

85.8

C-RNN

76

81

F-RNN

68

54.86

Baseline

64.5

36.45

Our Model

| Model | Test PPL | Validation PPL |
|---|---|---|
| Baseline | 105 | 245 |
| Our Model | 96 | 201 |

| Model | Test WER |
|---|---|
| Kaldi’s top 1 | 25.84% |
| Our model top 1 | 28.3% |
| Best of Kaldi’s top 1 + Our model’s top 1 | 24.26% |
| Best of Kaldi top 2 | 22.57% |

English

Hebrew

| Model | Test WER |
|---|---|
| Kaldi’s top 1 | 13.51% |
| Our model top 1 | 13.98% |
| Best of Kaldi’s top 1 + Our model’s top 1 | 12.29% |
| Best of Kaldi top 2 | 12.23% |

System Architecture

Results

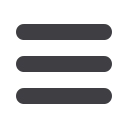

Network Architecture

Diagram labels: FastText Embedding, Character Embedding, Convolution, Addition, Highway, LSTM Layers, Max Pooling, Acoustic Features, Pre-Training, FastText, Output.
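The FastText Embedding component draws on subword information. As a rough illustration of that idea (not the poster's implementation), the sketch below extracts fastText-style character n-grams, using `<` and `>` as word-boundary markers, and sums the vectors of the n-grams found in a lookup table. The names `char_ngrams`, `subword_vector`, and `table` are hypothetical. Real fastText additionally hashes n-grams into a fixed number of buckets and adds a vector for the whole word, both of which are omitted here.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Return the character n-grams of `word`, with boundary markers added."""
    marked = f"<{word}>"  # "<" and ">" mark the start and end of the word
    grams = []
    for n in range(n_min, n_max + 1):
        for start in range(len(marked) - n + 1):
            grams.append(marked[start:start + n])
    return grams


def subword_vector(word, table, dim):
    """Sum the vectors of the word's n-grams that appear in `table`.

    `table` is a hypothetical dict mapping n-gram -> list of `dim` floats.
    N-grams missing from the table contribute nothing.
    """
    total = [0.0] * dim
    for gram in char_ngrams(word):
        vec = table.get(gram)
        if vec is not None:
            total = [a + b for a, b in zip(total, vec)]
    return total


if __name__ == "__main__":
    print(char_ngrams("where", 3, 3))
```

Because the vector is built from shared n-grams, inflected forms of the same stem receive related representations, which is the property that matters for morphologically rich languages.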

Goals

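Metrics

Perplexity (PPL) of a language model over a test sequence of $N$ words $w_1 \ldots w_N$:

$$\mathrm{PPL} = \sqrt[N]{\prod_{i=1}^{N} \frac{1}{P(w_i \mid w_1 \ldots w_{i-1})}} = 2^{-\frac{1}{N}\sum_{i=1}^{N} \log_2 P(w_i \mid w_1 \ldots w_{i-1})}$$

Word Error Rate (WER), where $S$, $D$, and $I$ are the numbers of substituted, deleted, and inserted words and $N$ is the number of words in the reference transcript:

$$\mathrm{WER} = \frac{S + D + I}{N}$$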
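The two metrics can be computed directly from their definitions. The following is a minimal sketch, assuming the per-word conditional probabilities are already available as a list and that transcripts are whitespace-tokenized. The function names are illustrative and not taken from the project.

```python
import math


def perplexity(token_probs):
    """Perplexity from per-word probabilities P(w_i | w_1 ... w_{i-1})."""
    if not token_probs:
        raise ValueError("need at least one probability")
    log_sum = sum(math.log2(p) for p in token_probs)
    return 2 ** (-log_sum / len(token_probs))


def word_error_rate(reference, hypothesis):
    """WER = (S + D + I) / N, computed as word-level edit distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # turning ref[:i] into an empty hypothesis costs i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    print(perplexity([0.25, 0.25, 0.25, 0.25]))
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

A model that assigns probability 0.25 to every word has a perplexity of 4, which matches the intuition of choosing uniformly among four words at each step.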

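Conclusions

Incorporating subword information can improve language model performance, including for morphologically rich languages such as Hebrew.

Rescoring with WER prediction did not directly improve on Kaldi’s performance: our model’s top hypothesis had a higher test WER than Kaldi’s in both experiments (28.3% vs. 25.84%, and 13.98% vs. 13.51%). There is potential for improvement, however, in an ensemble that combines the two methods: choosing the better of the two top hypotheses would lower test WER to 24.26% and 12.29%, respectively.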
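The poster does not specify how such an ensemble would combine the two methods. One possible form, shown purely as an illustration, is to rerank an n-best list by a weighted sum of the recognizer's cost and the predicted WER. Everything in this sketch is an assumption: the function name `rescore_nbest`, the `weight` parameter, and the premise that both scores are normalized to a comparable range where lower is better.

```python
def rescore_nbest(hypotheses, weight=0.5):
    """Return the hypothesis text with the lowest combined cost.

    `hypotheses` is a list of (text, asr_cost, predicted_wer) tuples.
    Both scores are assumed to be normalized so that lower is better.
    weight=0 trusts only the recognizer; weight=1 trusts only the
    predicted WER.
    """
    if not hypotheses:
        raise ValueError("n-best list is empty")

    def combined_cost(item):
        _, asr_cost, predicted_wer = item
        return (1 - weight) * asr_cost + weight * predicted_wer

    return min(hypotheses, key=combined_cost)[0]


if __name__ == "__main__":
    # Hypothetical scores, for illustration only.
    nbest = [
        ("recognize speech", 0.2, 0.9),
        ("wreck a nice beach", 0.3, 0.1),
    ]
    print(rescore_nbest(nbest, weight=0.0))
    print(rescore_nbest(nbest, weight=0.5))
```

In practice the weight would be tuned on a validation set, since neither score alone selected the best hypothesis consistently in the reported results.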